Docker Demystified: Laying the Foundation for a Secure Containerized Future

Docker has become a cornerstone of modern DevOps by making it easier to package, distribute, and run applications across different environments. Its ability to solve many traditional development and deployment challenges has led to widespread adoption for improved scalability and efficiency.

But with its advantages come security concerns. Misconfigurations, weak authentication, exposed APIs, and poor privilege management can open the door to serious threats like container breakout, privilege escalation, and data leaks. Integrating Docker with Security Information and Event Management (SIEM) tools helps address these risks by enabling real-time monitoring and threat detection.

Before we dive into securing Docker and its integration with SIEM in Part 2, it's important to first understand the fundamentals: how Docker works, its architecture, and how to get started. This blog lays the groundwork to help you confidently approach Docker security in the next part of our series.

To understand Docker's impact, let's first look at the world without Docker, a time when the following challenges were all too common.

The "Before Docker" Era:

Common issues when developing and running applications across different systems included:

- Inconsistent Application Setups: Running applications across different environments often led to errors due to mismatched configurations.

- Manual Software Installation: Setting up and managing software manually was a tedious process, prone to errors and delays.

- Dependency Conflicts: Applications often required different versions of tools and libraries, leading to compatibility issues and system instability.

- Scalability Challenges: Expanding applications required significant time and resources, complicating the process of meeting growing demands.

- Security Vulnerabilities: Without isolation between applications, a flaw in one application could lead to the compromise of the entire system.

- Deployment Frustrations: The infamous “It works on my machine” problem became a recurring theme, causing deployment failures and stress.

That’s where Docker comes in.

What is Docker?

Docker is an open-source platform that enables developers to build, deploy, run, update, and manage containers. Containers are standardized, executable components that combine application source code with the operating system libraries and dependencies required to run that code in a given environment.

According to 6sense, Docker dominates the containerization market with an impressive 87.85% market share. It is used by more than 63,000 companies globally, including major players like Microsoft, NVIDIA, and GitHub.

Docker Hub, a central repository for container images, has seen remarkable growth, with 318 billion total pulls and over 8.3 million application container image repositories. Additionally, Docker Desktop installations have reached 3.3 million, reflecting its widespread use among developers.

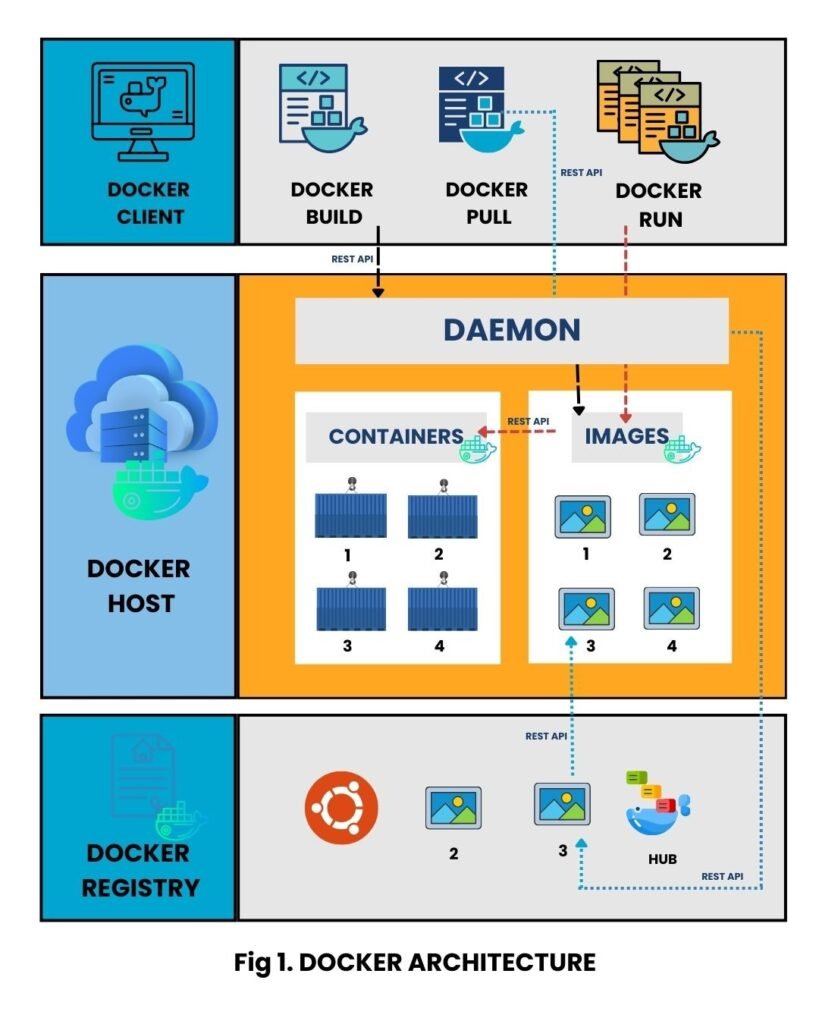

Docker Architecture and Terminology:

Here’s a detailed breakdown of the Docker architecture and key terms:

Docker Host: The physical or virtual machine running the Docker Engine (Linux or another Docker Engine-compatible OS).

Docker Engine: The underlying client-server application that manages Docker. It consists of the Docker daemon, the Docker API, and a command-line interface (CLI).

- Docker Daemon: A background service that creates and manages Docker objects.

- Docker API: A RESTful interface used by the Docker client to communicate with the daemon.

- Docker CLI: The command-line interface for users to interact with the Docker daemon.
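To make the client/daemon split concrete, here is a minimal Python sketch of what a client does under the hood: it sends an HTTP request to the daemon's REST API. It assumes a Linux host where the daemon listens on the default Unix socket `/var/run/docker.sock` and that the current user may read that socket. The helper names (`containers_path`, `UnixSocketConnection`, `list_containers`) are illustrative and not part of Docker.

```python
import http.client
import json
import socket
from urllib.parse import urlencode

# Assumption: default daemon socket path on a Linux host.
DOCKER_SOCKET = "/var/run/docker.sock"


def containers_path(show_all=False):
    """Build the Engine API path that lists containers."""
    if show_all:
        # all=true includes stopped containers, like `docker ps -a`.
        return "/containers/json?" + urlencode({"all": "true"})
    return "/containers/json"


class UnixSocketConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix socket instead of TCP."""

    def __init__(self, socket_path):
        super().__init__("localhost")
        self.socket_path = socket_path

    def connect(self):
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.connect(self.socket_path)


def list_containers(show_all=False, socket_path=DOCKER_SOCKET):
    """Ask the daemon for its containers, roughly what `docker ps` does."""
    conn = UnixSocketConnection(socket_path)
    try:
        conn.request("GET", containers_path(show_all))
        return json.loads(conn.getresponse().read())
    finally:
        conn.close()


# Example (needs a running daemon):
# for c in list_containers(show_all=True):
#     print(c["Id"][:12], c["Image"], c["State"])
```

The Docker CLI performs the same kind of request for you, which is why anyone who can reach the daemon's API effectively controls the host's containers.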

Docker Objects: The components of a Docker deployment, including images, containers, networks, volumes, plug-ins, etc.

- Docker Networks: Enable communication between containers and with the outside world.

- Docker Volumes: Provide persistent storage for container data.

- Docker Plug-ins and Extensions: Mechanisms to extend Docker Engine and Docker Desktop functionality.

- Docker Images and Containers: Images are blueprints containing everything an app needs. When launched, they become containers: lightweight, portable units that run applications in isolated environments.

Dockerfile: A text file containing instructions for building Docker images, automating the image creation process. It's essentially a list of instructions that Docker Engine runs, in order, to assemble the image.

Docker Build: The command (`docker build`) used to build Docker images from a Dockerfile.
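As a rough illustration of how a Dockerfile and `docker build` fit together, the Python sketch below renders a small Dockerfile for a hypothetical Python app and assembles the matching build command. The file names (`app.py`, `requirements.txt`), the base image tag, and the image tag `demo-app:1.0` are assumptions chosen for the example, not values from this article.

```python
import shlex


def render_dockerfile(base_image="python:3.12-slim", entrypoint="app.py"):
    """Return the text of a minimal Dockerfile for a hypothetical Python app."""
    lines = [
        f"FROM {base_image}",                   # start from a base image
        "WORKDIR /app",                         # working directory in the image
        "COPY requirements.txt .",              # copy the dependency list first
        "RUN pip install --no-cache-dir -r requirements.txt",
        "COPY . .",                             # then copy the application code
        f'CMD ["python", "{entrypoint}"]',      # default command at container start
    ]
    return "\n".join(lines) + "\n"


def build_command(tag, context="."):
    """Return the `docker build` argv that builds `context` into image `tag`."""
    return ["docker", "build", "-t", tag, context]


def as_shell(argv):
    """Render an argv list as a copy-pasteable shell line."""
    return shlex.join(argv)


# Example:
# print(render_dockerfile())
# print(as_shell(build_command("demo-app:1.0")))
```

Each instruction in the rendered file becomes a layer in the image, so copying the dependency list before the application code lets Docker reuse the cached dependency layer when only the code changes.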

Docker Hub: A public repository for sharing and downloading Docker images. It holds over 100,000 container images sourced from commercial software vendors, open-source projects and individual developers.

Docker Registry: A scalable storage and distribution system for Docker images, allowing for version tracking using tags.

Docker Desktop: An application for Mac, Windows, and Linux that provides a user-friendly environment for running Docker, including the Docker Engine, CLI, Compose, and Kubernetes.

Docker Compose: A tool for managing multi-container applications defined in a YAML file. It specifies which services are included in the application and can deploy and run containers with a single command.
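To show what such a YAML file might look like, here is a small Python sketch that holds an example Compose definition as a string and builds the single command that brings it up. The service names (`web`, `db`), images, port mapping, placeholder password, and the file name `compose.yaml` are all hypothetical choices for illustration.

```python
# Hypothetical two-service application: a web server and a database.
COMPOSE_YAML = """\
services:
  web:
    image: nginx:latest
    ports:
      - "8080:80"
    depends_on:
      - db
  db:
    image: mysql:8.0
    environment:
      MYSQL_ROOT_PASSWORD: change-me
    volumes:
      - db_data:/var/lib/mysql
volumes:
  db_data:
"""


def write_compose_file(path="compose.yaml"):
    """Write the example definition to disk and return the path."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(COMPOSE_YAML)
    return path


def compose_up_command(compose_file="compose.yaml", detached=True):
    """Return the argv that starts every service in the file."""
    command = ["docker", "compose", "-f", compose_file, "up"]
    if detached:
        command.append("-d")  # run in the background
    return command


# Example:
# write_compose_file()
# print(" ".join(compose_up_command()))  # docker compose -f compose.yaml up -d
```

The point of the sketch is the shape of the file: one entry per service, with networking, environment, and storage declared alongside it, so the whole application starts from one command.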

Installing Docker:

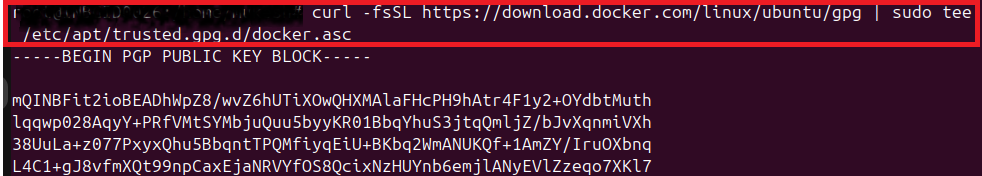

- Install required dependencies

- Add Docker's official GPG key

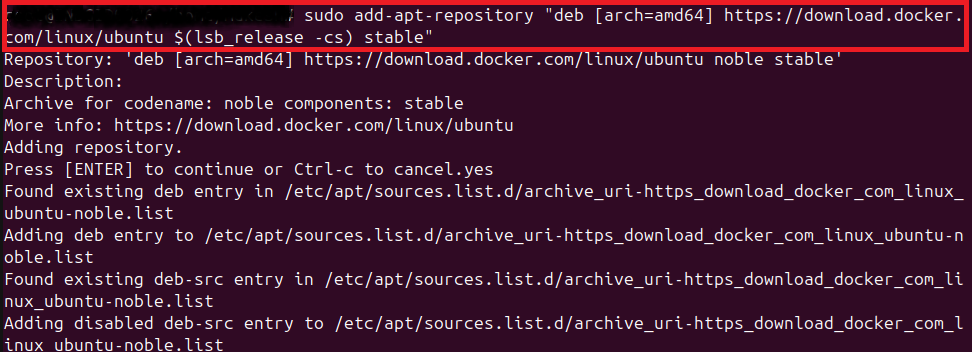

- Add Docker's APT repository

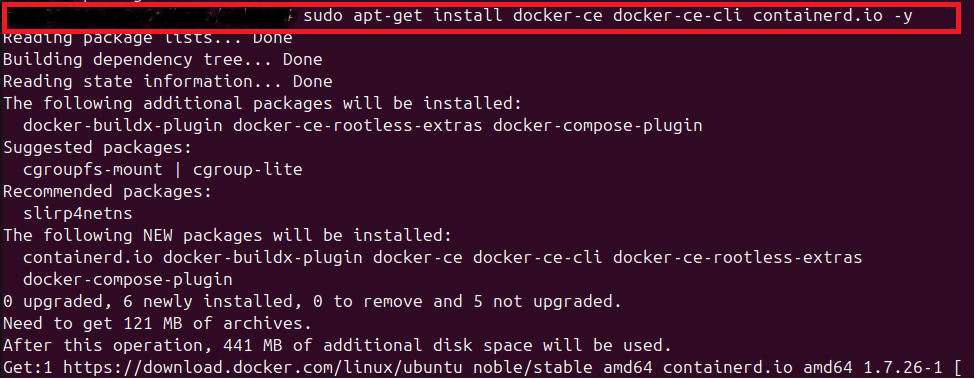

- Install Docker

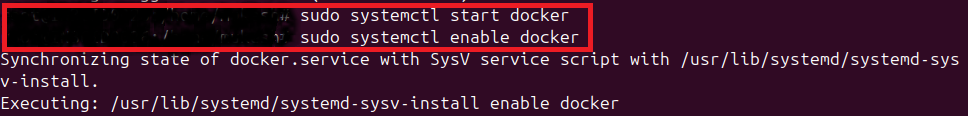

- Start the Docker service and enable Docker to start on boot

- Verify the Docker installation
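For readers who prefer text to screenshots, the steps above can be sketched as the following Python script, which lists one possible set of commands per step and prints them in dry-run mode by default. It assumes an Ubuntu host with `sudo` access and is modeled on Docker's documented apt-based installation; the exact commands in the screenshots may differ, and the helper names (`install_steps`, `run_steps`) are illustrative.

```python
import subprocess

# Assumption: Ubuntu host. The repository line is built in pieces for readability.
REPO_LINE = (
    'echo "deb [arch=$(dpkg --print-architecture) '
    'signed-by=/etc/apt/keyrings/docker.asc] '
    'https://download.docker.com/linux/ubuntu '
    '$(. /etc/os-release && echo "$VERSION_CODENAME") stable" '
    '| sudo tee /etc/apt/sources.list.d/docker.list > /dev/null'
)


def install_steps():
    """Return (title, shell commands) pairs mirroring the steps above."""
    return [
        ("Install required dependencies", [
            "sudo apt-get update",
            "sudo apt-get install -y ca-certificates curl",
        ]),
        ("Add Docker's official GPG key", [
            "sudo install -m 0755 -d /etc/apt/keyrings",
            "sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg"
            " -o /etc/apt/keyrings/docker.asc",
            "sudo chmod a+r /etc/apt/keyrings/docker.asc",
        ]),
        ("Add Docker's APT repository", [
            REPO_LINE,
            "sudo apt-get update",
        ]),
        ("Install Docker", [
            "sudo apt-get install -y docker-ce docker-ce-cli containerd.io"
            " docker-buildx-plugin docker-compose-plugin",
        ]),
        ("Start the Docker service and enable it on boot", [
            "sudo systemctl start docker",
            "sudo systemctl enable docker",
        ]),
        ("Verify the Docker installation", [
            "sudo docker --version",
            "sudo docker run hello-world",
        ]),
    ]


def run_steps(steps, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    count = 0
    for title, commands in steps:
        print(f"# {title}")
        for command in commands:
            print(f"$ {command}")
            if not dry_run:
                subprocess.run(command, shell=True, check=True)
            count += 1
    return count


# Example: run_steps(install_steps())                 # prints the plan only
#          run_steps(install_steps(), dry_run=False)  # actually installs
```

Keeping dry-run as the default makes it easy to review the commands against your distribution's documentation before anything runs with root privileges.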

Steps to install MySQL as an application on Docker:

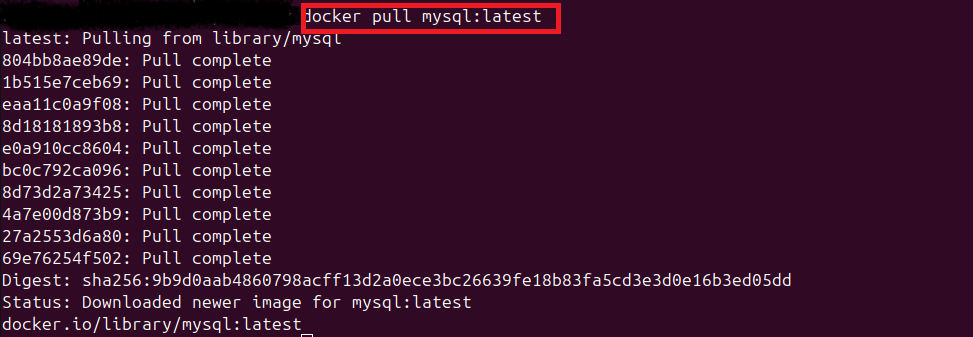

Docker streamlines the deployment process, making it faster and more reliable. Let's look at how easy it is to run a service like MySQL in a container.

- Pull MySQL Image:

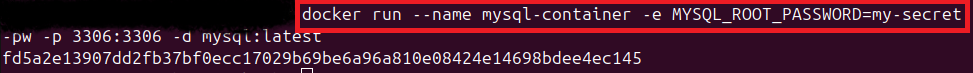

- Run the MySQL Docker container:

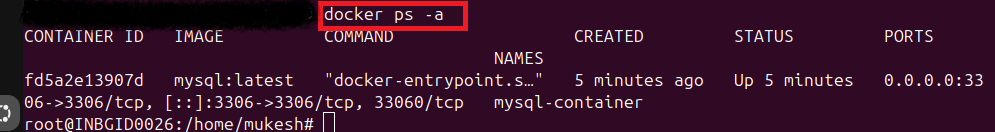

- Verify the MySQL container is running

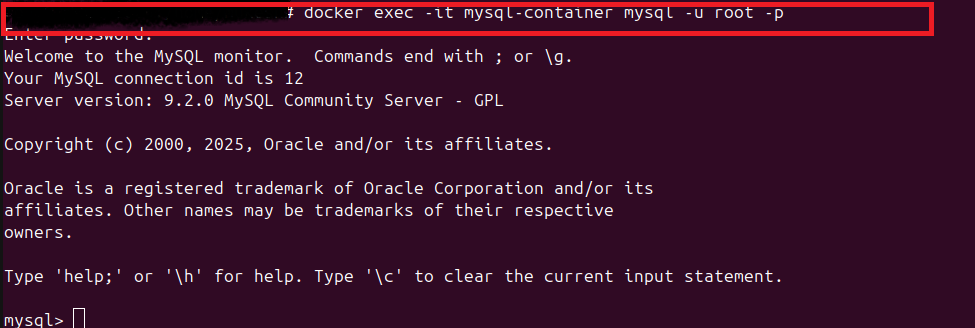

- Connect to MySQL
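The four MySQL steps above can be sketched in Python as a set of command lines, one per step. The container name `mysql-demo`, the placeholder root password, the `8.0` image tag, and the published port are assumptions for illustration; the screenshots may use different values. `MYSQL_ROOT_PASSWORD` is the environment variable the official MySQL image reads to set the root password.

```python
import shlex


def mysql_commands(name="mysql-demo", root_password="change-me",
                   tag="8.0", host_port=3306):
    """Return one docker argv per step: pull, run, verify, connect."""
    image = f"mysql:{tag}"
    return {
        # Step 1: download the image from Docker Hub.
        "pull": ["docker", "pull", image],
        # Step 2: start a detached container and publish the MySQL port.
        "run": [
            "docker", "run", "-d",
            "--name", name,
            "-e", f"MYSQL_ROOT_PASSWORD={root_password}",
            "-p", f"{host_port}:3306",
            image,
        ],
        # Step 3: confirm the container is running.
        "verify": ["docker", "ps", "--filter", f"name={name}"],
        # Step 4: open a MySQL shell inside the container (prompts for the password).
        "connect": ["docker", "exec", "-it", name, "mysql", "-u", "root", "-p"],
    }


def as_shell(argv):
    """Render an argv list as a copy-pasteable shell line."""
    return shlex.join(argv)


# Example:
# for step, argv in mysql_commands().items():
#     print(f"{step}: {as_shell(argv)}")
```

Passing the password on the command line is acceptable for a local demo only, since it ends up in shell history and in the container's inspectable configuration.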

Docker: The Game-Changing Benefits You Need to Know

- Portability

A Python web app running on a developer's laptop might break when moved to a server due to missing dependencies or different software versions. But with Docker, everything (Python, libraries, and settings) is packaged in a container. No matter where you run it (laptop, AWS, or an on-prem server), it behaves the same way.

- Efficiency

Running 10 VMs means loading 10 separate OS instances, consuming large amounts of memory and CPU. But 10 Docker containers can share a single OS kernel, starting in seconds instead of minutes, with minimal resource use.

- Scalability

If a Node.js web app runs in a single container and traffic surges, Docker allows you to deploy multiple instances quickly. Paired with Kubernetes, your app can auto-scale.

- Security

Running apps without Docker is like leaving your server wide open. Without isolation, a security flaw in one app could compromise the entire system.

With Docker, each application runs in its own containerized environment, which keeps one app from interfering with another. For example, if a Python web app gets exploited, the attacker does not automatically gain access to other services, such as a MongoDB database running in a separate container. Add built-in security features like image scanning, least-privilege permissions, and role-based access control (RBAC), and Docker becomes a fortress for your applications.

Conclusion:

We kicked off with the headaches of life without Docker - manual setups, dependency nightmares, and “but it worked on my machine” drama. Then, Docker swooped in like a superhero, making deployments smooth, portable, and predictable.

We unboxed Docker’s magic, broke down its core components, and tested its powers by running MySQL in a container - effortless and hassle-free!

Docker is a transformative technology that tackles fundamental challenges in application development, deployment, and management. By leveraging containerization, Docker offers significant benefits in terms of consistency, portability, efficiency, scalability, and security.

Next Up!

As mentioned earlier, though Docker makes developers' work easy, security gremlins lurk in the shadows. In the upcoming part of this Docker blog series, we will address the security risks associated with Docker and how to mitigate them with a SIEM solution, Wazuh.
